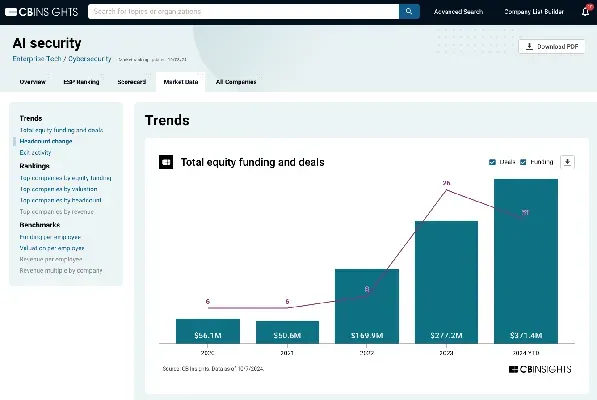

AI security funding has emerged as a critical topic within the rapidly evolving landscape of artificial intelligence. Recently, the AI security firm Irregular successfully raised $80 million in a funding round led by Sequoia Capital, signaling a growing recognition of the importance of robust security measures in AI technologies. As AI models continue to advance, the need for effective AI vulnerability detection and rigorous AI model evaluation becomes more pressing than ever. Irregular’s innovative approach to identifying potential risks in AI systems demonstrates the urgent demand for investment in AI security solutions. This influx of capital not only enhances the capabilities of firms like Irregular but also underscores the broader trend of prioritizing cybersecurity in the field of artificial intelligence.

Funding for artificial intelligence security is gaining traction, reflecting an industry-wide commitment to bolstering defenses against emerging threats. Notably, Irregular, a prominent player in evaluating AI systems, recently secured $80 million, highlighting the increasing importance of AI safeguards. This round, led by Sequoia Capital and Redpoint Ventures, suggests a shift towards understanding and addressing the vulnerabilities inherent in AI technologies. With models becoming adept at spotting security flaws, strategic investment in AI protections has become more critical. As the landscape continues to evolve, sustaining secure AI environments will be paramount to ensuring the integrity of these cutting-edge systems.

AI Security Funding Trends: A Game Changer

The recent $80 million funding round led by Sequoia Capital for Irregular represents a significant trend in the AI sector. As artificial intelligence continues to advance, securing these technologies is becoming increasingly critical. Investors are recognizing the need for robust AI security solutions that can handle the complex vulnerabilities inherent in today’s models. This funding round not only demonstrates trust in Irregular’s innovative approach but also highlights the increasing urgency for comprehensive AI security measures to protect against new forms of risk.

Moreover, funding for companies focused on AI security reflects a broader recognition of the threats posed by advanced AI systems. With firms like OpenAI enhancing their internal security protocols, there is a clear market demand for specialized solutions that can effectively evaluate and detect vulnerabilities in AI models. The involvement of established venture capital firms like Sequoia Capital suggests that the market is poised for growth, indicating that AI security funding is not just a temporary trend but a foundational aspect of the AI landscape moving forward.

AI Vulnerability Detection: The Rising Importance

AI vulnerability detection has emerged as a vital component in the development and deployment of AI technologies. As AI systems become more integrated into everyday processes, the potential for vulnerabilities has increased. Companies like Irregular are at the forefront of this effort, using frameworks like SOLVE to evaluate model security rigorously. This new focus on vulnerabilities is not just about identifying existing issues but also about anticipating future risks that could arise as AI continues to evolve.

The ongoing research and methodologies utilized in AI vulnerability detection allow developers and organizations to strengthen their defenses significantly. By assessing models with cutting-edge techniques, AI firms can ensure that systems don’t just perform well but do so securely. This proactive approach is increasingly crucial as adversaries become more skilled at exploiting weaknesses in AI. For any forward-thinking organization, the ability to detect and mitigate these vulnerabilities before they are exploited is essential.

Evaluating AI Models: The Need for Robust Security Measures

The evaluation of AI models is critical, particularly concerning their security aspects. As firms like Irregular have shown, assessing a model’s performance goes beyond traditional metrics to include evaluating its resilience against various types of threats. This comprehensive model evaluation enables stakeholders to understand the potential vulnerabilities that may be exploited by malicious actors, ensuring that robust security measures are integrated throughout the development lifecycle.

Furthermore, with advancements in AI capabilities, the need for these evaluations is becoming even more pressing. As models grow more intricate, the surface area for exploitation widens, necessitating thorough testing against hypothetical attack scenarios. The insights gleaned from these evaluations inform better training practices and the establishment of security protocols, creating a safer environment for implementing AI technologies across various sectors.

Investment Insights: Sequoia Capital and the Future of AI Security

The investment by Sequoia Capital in Irregular is a significant indicator of where investors see the future of AI security heading. Sequoia’s backing underscores the belief that as AI technology progresses, so too must the measures to secure it. This level of investment also signifies a willingness to support innovative solutions that address the manifold risks associated with AI, creating a hopeful outlook for startups dedicated to this essential aspect of technology.

Investors are increasingly drawn to firms that can predict and protect against AI-induced vulnerabilities. Sequoia’s involvement not only provides Irregular with the capital it needs to pursue its ambitions but also brings valuable market credibility. As a well-respected name in venture capital, Sequoia’s investment could catalyze further interest and partnerships in the AI security space, potentially leading to advancements in how AI systems are protected from emerging threats.

Emerging Risks in AI: Proactive Detection Strategies

As AI models become increasingly sophisticated, the emergence of new risks poses a significant challenge for developers and organizations. The proactive detection of these risks is crucial in order to mitigate potential threats before they are exploited. Companies like Irregular are pioneering innovative approaches, leveraging simulated environments and rigorous testing to uncover vulnerabilities that traditional testing might miss. This forward-thinking mindset is essential as the landscape of AI continues to evolve.

Strategies aimed at proactive risk detection allow organizations to stay ahead of the curve. Irregular’s commitment to identifying emergent behaviors in AI models reflects a broader industry-wide shift toward more holistic security practices. By anticipating potential weak points, companies can devise targeted strategies that not only respond to existing threats but also prepare for challenges that have yet to manifest in the rapidly changing AI environment.

Irregular Funding: Fueling Innovation in AI Security Solutions

The injection of $80 million in funding for Irregular is a catalyst for innovation in the AI security sector. This funding enables the company to continue refining its AI security measures and enhance its vulnerability detection framework. With a valuation now pegged at $450 million, Irregular is well-positioned to leverage this capital in developing cutting-edge solutions that address the multitude of threats posed by advanced AI systems.

As competition heats up in the AI landscape, the need for innovative security solutions becomes paramount. Irregular’s access to additional resources will likely lead to more comprehensive testing frameworks and improved model evaluations, setting new standards in AI security. This kind of funding not only supports the development of effective security measures but also signals to other players in the industry that investing in AI security is both necessary and lucrative.

AI Security Measures: Addressing New Threats Head-On

The evolving landscape of AI requires robust security measures that can effectively address new threats as they arise. With incidents of malicious AI use on the rise, firms like Irregular are pioneering methods to safeguard against such abuses. Their approach to AI security involves not only identifying vulnerabilities but also actively working to prevent potential exploits before they can occur.

Security measures in the AI field must be dynamic and adaptable to keep pace with rapid advancements in technology. Irregular’s work exemplifies the importance of a proactive stance on AI security, where anticipating threats and building resilient systems becomes standard practice. As malicious actors develop more sophisticated techniques, the defenses put in place by companies must evolve accordingly, ensuring that the deployment of AI can proceed safely and responsibly.

Sequoia Capital’s Role in Transforming AI Security Initiatives

Sequoia Capital’s strategic investment in Irregular marks a pivotal moment for the AI security sector. Their support not only provides financial backing but also brings extensive networks and industry expertise to the table. This collaboration can significantly impact the development and proliferation of effective AI security measures. By investing in companies focused on addressing vulnerabilities, Sequoia is playing a vital role in shaping a future where AI technologies are utilized safely.

The role of investors like Sequoia extends beyond funding; it involves creating an ecosystem that encourages innovation and responsible growth within the AI sector. Their influence can galvanize other financiers to consider the importance of AI security, sharpening the market’s focus on this critical need. As a result, we may see an influx of resources towards developing technologies that can effectively deal with inherent AI vulnerabilities, ultimately guiding safer adoption across various industries.

The Future of AI Security: Preparing for the Unknown

Looking ahead, the future of AI security is both promising and daunting. As technology advances, so too will the complexities associated with securing different AI systems. Companies like Irregular are preparing for the unknown by developing adaptive and scalable security solutions that can address vulnerabilities without hindering innovation. This foresight is essential as the industry braces for an era of increasingly autonomous AI operations.

In the face of emerging threats and constantly evolving challenges, a proactive and well-funded approach to AI security will shape the landscape for years to come. As firms enhance their capabilities to evaluate and secure AI models, the potential for disruptive and beneficial applications of AI continues to grow. Investing in AI security now is not just about addressing current vulnerabilities; it is about building a resilient future where AI technologies can flourish without compromising on safety or ethics.

Frequently Asked Questions

What recent funding has Irregular received for AI security measures?

Irregular recently secured $80 million in funding led by Sequoia Capital and Redpoint Ventures, valuing the company at $450 million. This funding aims to enhance AI security measures, particularly regarding AI vulnerability detection and evaluation.

How does Irregular’s SOLVE framework contribute to AI security funding initiatives?

Irregular’s SOLVE framework is designed for scoring a model’s vulnerability detection capabilities. This innovative approach plays a significant role in attracting AI security funding by demonstrating effective evaluation methods that address emerging risks and security challenges in the AI landscape.

Why is securing AI models important for investors considering AI security funding?

Securing AI models is crucial for investors because as AI technologies evolve, the potential risks associated with them increase. Investments in AI security funding focus on developing robust measures to protect against vulnerabilities, ensuring that these advanced models can operate safely in real-world applications.

What does the recent $80 million funding round indicate about the future of AI vulnerability detection?

The $80 million funding round for Irregular signifies a growing confidence in the future of AI vulnerability detection technologies. This investment reflects the high demand for innovative solutions that can predict and address security risks before they occur, underscoring the importance of proactive AI security measures.

How is Irregular planning to address emergent risks in AI models with its funding?

With the new funding, Irregular plans to enhance its capabilities in detecting emergent risks through advanced simulations. Its approach involves testing AI models in complex network scenarios to identify vulnerabilities before the models are deployed.

What are the implications of Sequoia Capital’s investment in AI security firms like Irregular?

Sequoia Capital’s investment in Irregular highlights a strategic focus on AI security firms, reflecting a broader industry acknowledgment of the critical need for robust AI security measures. This backing aims to accelerate advancements in AI vulnerability detection and model evaluation as the industry matures.

How does Irregular’s approach to AI security differ from traditional methods?

Irregular’s approach to AI security uses simulated environments to rigorously test models, allowing identification of both vulnerabilities and defenses in ways that traditional methods may not accomplish. This innovative strategy positions them at the forefront of AI vulnerability detection within a rapidly evolving market.

What challenges do AI security measures face as models become more capable?

As AI models grow in sophistication, AI security measures face the challenge of adapting to rapidly evolving threats. Irregular’s founders acknowledge that with increased capabilities come more security challenges, necessitating continuous advancements in AI vulnerability detection and proactive defense strategies.